Feature Splatting for Better Novel View Synthesis with Low Overlap

3D Gaussian Splatting

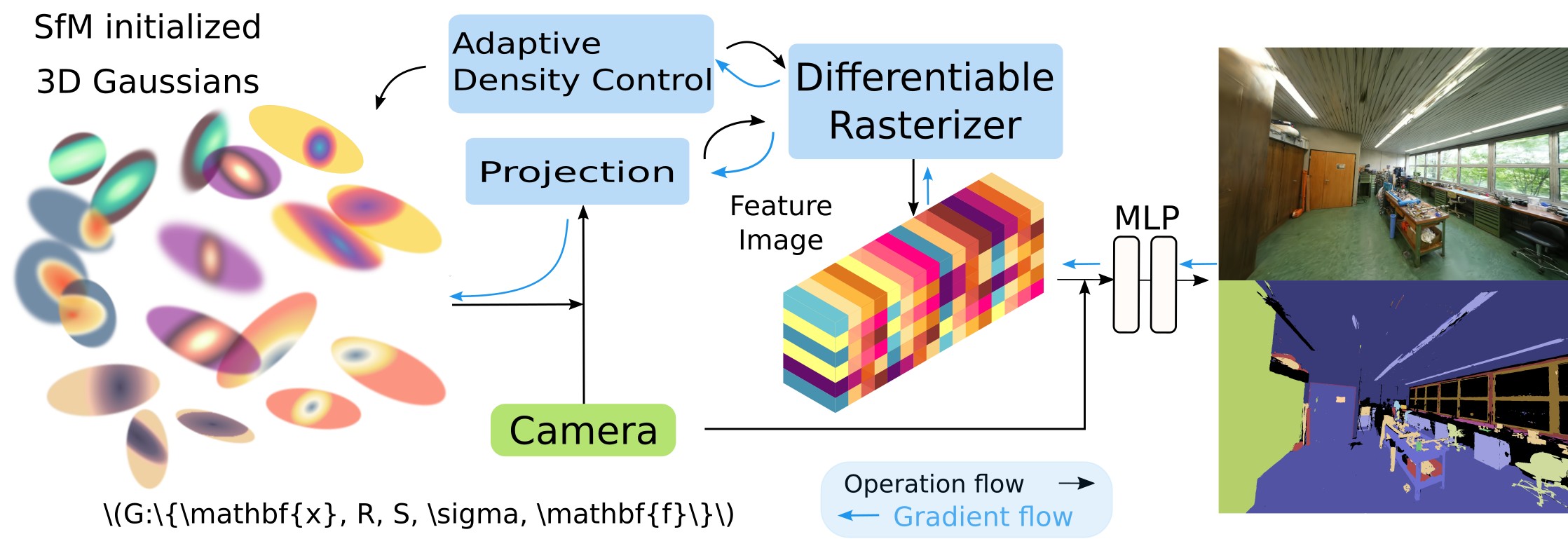

In this paper, we propose to encode the color information of 3D Gaussians into per-Gaussian feature vectors, an approach we denote Feature Splatting (FeatSplat). To synthesize a novel view, Gaussians are first “splatted” onto the image plane, then the corresponding feature vectors are alpha-blended, and finally the blended vector is decoded by a small MLP to render the RGB pixel values. To further inform the model, we concatenate a camera embedding to the blended feature vector, so that the decoding is also conditioned on viewpoint information. Our experiments show that this novel model for encoding radiance considerably improves novel view synthesis for low-overlap views that are distant from the training views. Finally, we also show the capacity and convenience of our feature vector representation, demonstrating its capability not only to generate RGB values for novel views, but also to modify scene lighting after optimization and to learn per-pixel semantic labels.
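The per-pixel pipeline described above (alpha-blend the feature vectors, concatenate a camera embedding, decode with a small MLP) can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`alpha_blend`, `decode_rgb`, `random_params`), the single hidden layer, the ReLU and sigmoid activations, the feature and embedding sizes, and the random untrained weights are all assumptions chosen only to keep the example short and runnable. The 2D projection of the Gaussians is assumed to have already produced a depth-sorted list of opacities for the pixel.

```python
import math
import random


def alpha_blend(features, alphas):
    """Front-to-back alpha compositing of feature vectors for one pixel.

    features: per-Gaussian feature vectors, sorted near to far.
    alphas:   opacity of each Gaussian at this pixel, in [0, 1].
    """
    blended = [0.0] * len(features[0])
    transmittance = 1.0  # fraction of light not yet absorbed
    for feat, alpha in zip(features, alphas):
        weight = transmittance * alpha
        for i, value in enumerate(feat):
            blended[i] += weight * value
        transmittance *= 1.0 - alpha
    return blended


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


def decode_rgb(blended, camera_embedding, params):
    """Decode a blended feature vector into RGB with a tiny MLP.

    The layer layout and activations are assumptions of this sketch.
    """
    x = blended + camera_embedding  # list concatenation
    hidden = [max(0.0, v) for v in linear(x, params["w1"], params["b1"])]
    out = linear(hidden, params["w2"], params["b2"])
    return [1.0 / (1.0 + math.exp(-v)) for v in out]  # squash to (0, 1)


def random_params(in_dim, hidden_dim, out_dim=3, seed=0):
    """Hypothetical untrained weights, used only so the sketch runs."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                for _ in range(rows)]

    return {"w1": matrix(hidden_dim, in_dim), "b1": [0.0] * hidden_dim,
            "w2": matrix(out_dim, hidden_dim), "b2": [0.0] * out_dim}


# Two Gaussians covering one pixel, each with a 2-dimensional feature.
features = [[1.0, 0.0], [0.0, 1.0]]
alphas = [0.5, 0.5]
blended = alpha_blend(features, alphas)  # [0.5, 0.25]

camera_embedding = [0.1, -0.2, 0.3]  # placeholder viewpoint encoding
params = random_params(len(blended) + len(camera_embedding), hidden_dim=8)
rgb = decode_rgb(blended, camera_embedding, params)
```

In this sketch the view dependence enters only through `camera_embedding`, so the same blended feature can decode to different colors from different viewpoints. In a trained model the features and MLP weights would be optimized jointly rather than sampled at random.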

@article{martins2024feature,
  title={Feature Splatting for Better Novel View Synthesis with Low Overlap},
  author={T. Berriel Martins and Javier Civera},
  year={2024},
  eprint={2405.15518},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}